Author: Erick.X, Chief Architecture Expert at 复睿微

Note: the original English text follows this translated version.

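1. Virtualization Overview

1.1 Motivation and Benefits

Virtualization is a widely used technology that underpins almost all modern cloud computing and enterprise infrastructure. With virtualization, developers can run multiple operating systems on a single machine and test software without risking damage to the main computing environment. Virtualization brings many properties to chips and infrastructure, including strong isolation, availability of different resources, workload balancing on shared resources, and sandboxed protection.

For automotive chips, and cockpit-domain SoCs in particular, the current trend is to cut cost through higher integration. The physical resources for several operating systems are therefore integrated on one SoC, and they must coexist under safe and secure isolation in a virtualized environment. Specifically, virtualization on an automotive SoC faces the following requirements and challenges:

- Isolation between at least three domains with different safety requirements and heterogeneous operating systems: a safety island running an RTOS that must meet ASIL-D, an instrument cluster domain running QNX or a lightweight Linux that must meet ASIL-B, and an infotainment domain running Android or HarmonyOS.

- Availability and workload balancing of critical resources such as memory and CPU worker threads, especially when the GPU or NPU is handling heavy workloads. Under power and memory-capacity constraints, virtualization is used to guarantee resource availability and flexibility dynamically.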

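1.2 Introduction to Hypervisors

The hypervisor is the core component that supports virtualization. It is mainly responsible for handling virtual machine traps and managing the actual physical resources. Hypervisors fall into two broad categories:

- Native standalone hypervisors, usually called Type1 hypervisors. A Type1 hypervisor runs at the highest privilege and controls and manages all physical resources. Resource management and scheduling related to each virtual machine can be offloaded to that VM's operating system, so the hypervisor can focus on virtualization-related functions. Typical examples are the QNX Hypervisor and the Xen hypervisor.

- Hypervisor extensions embedded in a host operating system, usually called Type2 hypervisors. Basic management is provided by the host operating system, and the hypervisor extension focuses only on virtualization support, working together with the host OS. A typical example is Linux KVM.

In terms of the ARM exception model, the applications or user space of a guest operating system normally run at exception level EL0, and the guest kernel runs at EL1. EL2 was introduced to support the virtualization extensions. The levels break down as follows and are shown in the figure below:

- For a Type1 hypervisor: guest user space runs at EL0, the guest kernel at EL1, and the standalone hypervisor at EL2.

- For a Type2 hypervisor: the user space of both the guest and the host operating system runs at EL0, the guest kernel at EL1, and the host kernel with the hypervisor extension at EL2.

Figure 1. Hypervisor types and the corresponding ARM exception levels

Without loss of generality, the following chapters use the software implementation of the QNX Hypervisor and the hardware implementation based on the ARMv8.0 architecture as typical examples to introduce the main problems and solutions in virtualization today. By function, virtualization on current mainstream cockpit SoCs can be divided into three categories:

- CPU virtualization, for VM management and execution;

- memory virtualization, for memory space separation and management;

- device virtualization, for device configuration and management.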

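2. CPU Virtualization

2.1 CPU Virtualization Overview

A CPU or processor provides the abstraction of virtual processors to VMs and executes the instructions of a given VM. In general, the hypervisor executes directly on the physical CPU, occupies physical resources, and uses the physical CPU's ISA directly. A guest operating system occupies resources and executes instructions through the virtual processor abstraction; except for actions that need higher privilege, it too can use the physical CPU resources and the physical ISA directly in most scenarios.

Cooperation between the hypervisor and the VMs is implemented through the trap mechanism. A trap normally occurs when a VM or application needs higher privilege to proceed. With the ARM exception levels introduced above, VMs and applications have at most EL1 privilege, so an instruction they are not permitted to execute triggers a trap. The detailed flow is:

- Normally, the VM runs its instructions on the physical CPU, just as it would without a hypervisor;

- When the guest operating system or an application tries to execute an instruction beyond its privilege, a trap is triggered and a context switch moves execution from the guest to the hypervisor;

- After the trap, the hypervisor takes over, saves the VM's context, and then completes the task the guest had started;

- When the hypervisor has completed the task, it restores the VM's context and hands execution back to the VM.

Figure 2. Trap mechanism and handling flow

The virtual machine abstraction and trap handling are described further below for the QNX Hypervisor and the ARM architecture.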

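2.2 QNX VM and vCPU Support

The figure below shows the hierarchy of resources and components in the QNX Hypervisor software architecture. Seen from the hypervisor as an operating system, each VM is registered in hypervisor user space by instantiating a qvm process. When a VM is configured, a corresponding qvm process is created and configured according to the specification to define the VM's components, including virtual processors (vCPUs), virtual devices, and the memory management page-table configuration.

Figure 3. QNX Hypervisor resource hierarchy

During normal hypervisor operation, a qvm process instance does the following:

- traps access attempts going out of and into the virtual machine and handles them according to their type;

- saves the VM's context before relinquishing the physical CPU;

- restores the VM's context before the physical CPU resumes executing that VM;

- handles virtualization-related faults;

- performs the maintenance needed to ensure the integrity of the VM.

When a qvm process is instantiated, several vCPU threads, a list of virtual devices, and a Stage2 page table are instantiated inside it, serving as the application-thread abstraction, the virtual-device abstraction, and the memory-virtualization abstraction respectively. For the vCPU abstraction, the QNX Hypervisor follows a priority-based vCPU sharing model, where the priorities comprise the qvm process priority and the vCPU thread priority. Under the hypervisor's rules, the relative priorities of the qvm processes and the priorities of the vCPU scheduling threads inside each qvm process together determine, hierarchically, which vCPU gets access to a physical CPU. During mapping and subsequent execution, however, the contents and data running inside a VM are a complete black box to the hypervisor. The hypervisor only ensures that, when physical CPUs are shared according to vCPU priority and scheduling policy, a higher-priority vCPU always preempts a lower-priority one. The virtual device list and the Stage2 page table are covered in later chapters.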

2.3 ARM 虚拟机和虚拟处理器支持

ARM架构中的下陷机制是通过异常处理来实现的。如上文所述,通常虚拟机操作系统的应用程序或用户空间处于EL0安全等级。虚拟机操作系统的内核态空间处于EL1安全等级。Hypervisor处于EL2安全等级。如下如左边所示,当超出EL1安全等级的VM或者应用程序指令执行时,将向 EL2级别的Hypervisor发出异常下陷,交由Hypervisor来处理异常,然后通过上下文切换返回到EL1安全等级的VM。

下图右边示例了一个CPU捕获WFI的处理过程。执行等待中断WFI指令通常会使物理CPU进入低功耗状态。通过注入断言TWI信号,如果满足HCR_EL2.TWI==1,则在EL0或EL1安全等级上执行WFI将导致异常并下陷到EL2安全等级上处理。在此示例中,VM通常会在空闲循环中执行WFI,而Hypervisor可以捕获此类下陷动作,并调度不同的vCPU到这个物理CPU,而不是直接进入低功耗状态。

图4. 下陷机制和WFI下陷示例

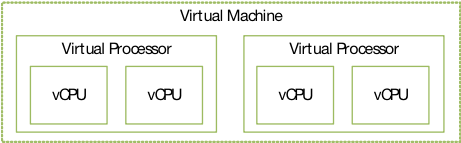

在ARM体系结构中,vCPU一般代表虚拟的处理单元,每个vCPU在Hypervisor中需要例化对应的vCPU线程。VM对应的是Hypervisor中例化的qvm进程,会包含一个或者多个vCPU线程。

图5. ARM虚拟机和虚拟处理器层级关系

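3. Memory Virtualization

3.1 Memory Virtualization Overview

Together with CPU virtualization, memory virtualization ensures that every VM has an independent memory space that is strictly isolated according to privilege. The key requirement of memory virtualization is address management: configuring and controlling each VM's access to physical memory according to context.

Memory virtualization is typically implemented by combining memory allocation and release management in software with control of two-stage address translation in hardware. The allocation and release mechanisms of the hypervisor and of the VMs resemble those inside a typical operating system and use memory management based on multi-level page tables.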

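3.2 ARM Memory Management Architecture

The ARM architecture uses a page-table-based two-stage address translation mechanism. Typically, stage-1 translation converts a virtual address (VA) into an intermediate physical address (IPA) and is managed and controlled by the operating system. Stage-2 translation converts the IPA into a physical address (PA) and is managed and controlled by the hypervisor. The two stages are independent of each other. As shown in Figure 6, contiguous virtual page addresses can map to scattered intermediate physical pages, and scattered intermediate physical page addresses can map to contiguous physical pages.

Figure 6. Two-stage address translation mechanism

Figure 7 shows the typical two-stage address spaces of the ARMv8 architecture. The main virtual address spaces are:

- the guest operating system virtual memory map at Non-secure EL0/EL1;

- the hypervisor virtual memory map at Non-secure EL2;

- the secure monitor virtual memory map at EL3.

These virtual address spaces are independent of one another, and each has its own configuration and page tables. The two-stage tables shown in the figure support memory virtualization fairly completely: the Stage1 guest OS tables translate virtual addresses into intermediate physical addresses, covering peripherals such as serial devices, memory, and storage devices, while the Stage2 virtualization tables translate intermediate physical addresses into valid physical addresses. The hypervisor and the secure monitor use their own tables.

Figure 7. Typical ARMv8 two-stage address spaces

In the AArch64 architecture, addresses are typically 48 bits wide and the page size is typically 4KB or 64KB. To keep the page tables from growing too large, translation is usually split into a 4-level page table (for the 4KB page size), and each level is indexed by one field of the address. The figure below shows a 4-level page table and a stage-1 translation example; the details are not covered further in this article.

Figure 8. AArch64 4-level page table and stage-1 address translation example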

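4. Device Virtualization

4.1 Device Virtualization Overview

In virtualization, a device can be summarized as any component in the system that can access memory, other than the processors running the hypervisor and the VMs. In cockpit chips, the typical device types are:

- Software-emulated virtualized devices: the accesses of the native driver are trapped by software emulation or hardware virtualization, and the behavior of the virtual device is then emulated inside the hypervisor. All VMs interact with the device through hypervisor traps;

- Para-virtualized devices: an actual physical device is emulated for all VMs, and each VM can issue batched calls to the device through a single trap;

- Pass-through devices: owned exclusively by one specific VM in the virtualized environment;

- Shared devices: usable by one VM, or by one or more VMs and the hypervisor itself.

The following chapters describe how device virtualization is implemented in the QNX Hypervisor and how the ARM architecture supports it.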

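4.2 QNX Device Virtualization Support

Devices in the QNX Hypervisor can be grouped into:

- physical devices, including pass-through devices and shared devices;

- virtual devices, including fully virtualized and para-virtualized devices.

As described earlier, when the QNX Hypervisor configures and instantiates a qvm process for a VM, the physical devices and virtual devices (vdevs) must be assigned to the qvm process and the VM. The figure below shows how the QNX Hypervisor supports the different kinds of device virtualization.

Figure 9. Device virtualization support in the QNX Hypervisor

For pass-through devices, the VM has direct and exclusive access and the hypervisor host operating system is bypassed. The drivers for these devices are also owned directly by the VM. The hypervisor only needs to route interrupts from the physical device directly to the corresponding VM and pass all signals from the VM directly to the device. All interaction takes place between the VM and the device; the hypervisor needs to identify and let through the interrupts from the device and the signals from the VM. Typical examples of pass-through devices are PCIe and Ethernet.

Shared devices can be used by more than one guest; a typical example is shared memory. The QNX Hypervisor supports two kinds of device sharing, referred sharing and mediated sharing:

- Referred sharing: the shared device is assigned as a pass-through device to one specific VM, which also manages the device driver. Other authorized VMs access the device through a virtual device.

- Mediated sharing: all authorized VMs access the device through a virtual device, and the hypervisor controls the device and manages its driver.

For virtual devices, the QNX Hypervisor supports both fully virtualized and para-virtualized devices, which isolate direct communication between physical devices on the system:

- Fully virtualized (software-emulated) devices: virtual devices that emulate an actual physical device for a VM. When using such a device, the VM does not need to know that it is running in a virtualized environment. Depending on the type of physical device, a fully virtualized device may handle everything itself or act as an intermediary between the VM and the actual physical device. Typical examples include the interrupt controller (GIC) and timers.

- Para-virtualized devices: compared with fully virtualized devices, para-virtualized devices can process a batch of device calls in a single exception trap, which improves efficiency and reduces the extra traps introduced by virtualization. The QNX Hypervisor supports para-virtualized devices based on the VirtIO 1.0 standard, including typical block devices, I/O devices, consoles, GPU, DPU, and ISP.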

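4.3 ARM Device Virtualization Support

The ARM architecture supports device virtualization in several ways. The main mechanisms are the System Memory Management Unit (SMMU) and the Generic Interrupt Controller (GIC) with virtualization support.

For devices in the SoC other than the processors, especially DMA controllers or devices containing DMA controllers, the SMMU guarantees address translation and OS-level memory protection when the device is allocated by a VM in a virtualized scenario. The left diagram below shows a DMA controller from the operating system's point of view without virtualization support. The DMA controller is programmed through a driver in the kernel space of the host operating system, and the driver configures the DMA with physical addresses based on the MMU. In a virtualized environment, however, a pass-through DMA seen from a specific VM can only rely on that VM's stage-1 MMU translation, so it can only be configured with intermediate physical addresses. The right diagram therefore shows the SMMU used to support virtualization of this DMA controller, which lets the VM configure the pass-through DMA directly. All memory accesses are translated from IPA to PA by the SMMU, which is programmed by the hypervisor host, so that the VM and the DMA both operate on IPAs and keep a consistent view of memory.

Figure 10. SMMU support for device virtualization in the ARM architecture

Starting with Arm GICv2, as shown in the figure below, the GIC can signal both physical and virtual interrupts by providing a physical CPU interface and a virtual CPU interface. Functionally the two interfaces are identical, except that one signals physical interrupts and the other signals virtual interrupts. The hypervisor can map the virtual CPU interface into a VM, allowing software in that VM to communicate directly with the GIC. The advantage of this mechanism is that the hypervisor only needs to set up the virtual interface and does not need to fully emulate the GIC. This reduces the number of interrupt-related traps and therefore the overhead of virtualizing interrupts.

Figure 11. GIC support for device virtualization in the ARM architecture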

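5. Conclusion

Virtualization is rapidly becoming a key technology in the software architecture of modern vehicles, especially as cockpit SoCs face the challenges of safety isolation, flexibility, and high utilization. The combination of hypervisor software and hardware virtualization extensions can make virtualization in the cockpit more efficient and safer.

English original: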

1. Virtualization overview

1.1 Motivation and Benefits

Virtualization is a widely used technology that underpins almost all modern cloud computing and enterprise infrastructure. Developers use it to run multiple operating systems on a single machine and to test software without the risk of damaging the main computing environment. Virtualization brings many features to chips and infrastructure, including isolation, high availability, workload balancing, and sandboxing.

As for automotive chips, especially the cockpit SoCs, cost savings via high levels of integration are driving the need for safe and secure co-existence of multiple operating environments on the same SoC chip. More specifically, the requirements and challenges of virtualization are:

- Isolation between at least three domains with different safety requirements and heterogeneous operating systems: the safety island running an RTOS with an ASIL-D requirement, the cluster domain running QNX or a lite Linux with an ASIL-B requirement, and the infotainment domain running Android or HarmonyOS.

- High availability and workload balancing on critical resources, including main memory capacity and CPU worker threads, especially when GPUs or NPUs are handling huge workloads. Under power and memory capacity constraints, resource availability and flexibility should be guaranteed dynamically through virtualization.

1.2 Hypervisor Introduction

The hypervisor is the key component of virtualization: it handles virtual machine traps and manages the physical resources. Hypervisors can be divided into two broad categories:

- Native standalone hypervisor, or Type1 hypervisor, which runs with the highest privilege and controls and manages all physical resources. Because resource management and scheduling can be offloaded to the operating systems of the VMs, the Type1 hypervisor can focus on virtualization-related functionality. Typical examples are the QNX Hypervisor and the Xen hypervisor.

- Hosted OS with hypervisor extension, or Type2 hypervisor, where the host operating system provides basic management and the hypervisor extension focuses on virtualization support. A typical example is Linux KVM.

In terms of the ARM exception levels, the applications or user spaces of the guest OSes typically run at EL0, and the kernel spaces of the guest OSes (VMs for short) run at EL1. To support the virtualization extension, EL2 has been introduced for the hypervisor. More specifically:

- For a Type1 hypervisor: the user spaces belonging to the guest OSes are at EL0, the VMs are at EL1, and the standalone hypervisor is at EL2.

- For a Type2 hypervisor: the user spaces belonging to the guest OSes and the host OS are both at EL0, the VMs are at EL1, and the host OS with the hypervisor extension is at EL2.

Figure 1. Hypervisor Types Corresponding to ARM Exception Level

In the following chapters, the QNX Hypervisor software implementation and the ARMv8.0-based hardware implementation are used as typical examples for easier explanation. Based on functionality, the virtualization of modern systems-on-chip can be classified into CPU virtualization for VM management and execution, memory virtualization for memory space separation and management, and device virtualization for device configuration and management. All three types are introduced in the following chapters.

2. CPU Virtualization

2.1 CPU Virtualization overview

CPUs or processors can provide the abstraction of the virtual processors for the VMs and execute the corresponding instructions of specific VM.

Typically, the hypervisor executes on the physical CPUs, occupying the physical resources and using the physical ISA directly. The VMs occupy resources and execute instructions based on the abstraction of virtual processors; in most scenarios they can also use the physical CPUs directly, except for actions that need higher privilege.

The cooperation between the hypervisor and the VMs is achieved through the trap mechanism, which is used when a VM or application needs higher privilege to execute. With the ARM exception levels for virtualization introduced above, the VMs and applications have at most EL1 privilege, so an instruction they are not permitted to execute triggers a trap. The detailed procedure is:

- Usually, the VM executes its instructions on a physical CPU, just as if it were running without a hypervisor.

- When the VM or an application attempts to execute an instruction it is not permitted to execute, a virtualization hardware trap takes place, with a guest exit and a context switch.

- On the trap, the hardware notifies the hypervisor, which saves the VM’s context and completes the task the VM had begun but was unable to complete by itself.

- On completion of the task, the hypervisor restores the VM’s context and hands execution back to the VM (guest entrance).

Figure 2. Trap Mechanism for CPU Virtualization
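The four steps above can be sketched as a small trap-and-emulate loop. This is a minimal illustration, not QNX or ARM code: the instruction names, the `VCpu` and `Hypervisor` classes, and the `x0`/`x1` register keys are hypothetical, and a real hypervisor would decode a hardware syndrome register rather than compare strings.

```python
from dataclasses import dataclass, field

# Hypothetical set of instructions the guest may not execute directly.
PRIVILEGED = {"write_sysreg", "wfi"}


@dataclass
class VCpu:
    regs: dict = field(default_factory=dict)   # guest-visible register state
    saved: dict = field(default_factory=dict)  # context saved on guest exit


@dataclass
class Hypervisor:
    trap_log: list = field(default_factory=list)

    def handle_trap(self, vcpu, instr):
        vcpu.saved = dict(vcpu.regs)        # guest exit: save the VM's context
        result = f"emulated:{instr}"        # complete the task on the guest's behalf
        self.trap_log.append(instr)
        vcpu.regs = dict(vcpu.saved)        # guest entry: restore the VM's context
        vcpu.regs["x0"] = result            # hand the emulation result back


def run(vcpu, hyp, program):
    """Run a guest program; return how many instructions ran without a trap."""
    native = 0
    for instr in program:
        if instr in PRIVILEGED:
            hyp.handle_trap(vcpu, instr)    # not permitted at the guest's level
        else:
            native += 1                     # executes directly on the physical CPU
    return native
```

In this sketch only the privileged instructions pay the cost of a guest exit and entry; everything else runs as if no hypervisor were present, which is the property the procedure above relies on.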

The detailed support for the virtual machine abstraction and trap mechanism in the QNX hypervisor and ARM architecture will be introduced below.

2.2 QNX VM and vCPU Support

The detailed hierarchy of resources and components in the QNX Hypervisor environment is shown in the figure below. From the point of view of the hypervisor itself, a VM is implemented as a qvm process, which is an OS process running in the hypervisor host, outside the kernel space. When a VM is configured, a qvm process is created and configured according to the specification to define the virtual components of the machine, including the virtual CPUs (vCPUs), virtual devices, and memory management configuration.

Figure 3. Resource Hierarchy of QNX Hypervisor

During operation a qvm process instance does the following:

- Traps outbound and inbound guest access attempts and determines what to do with them.

- Saves the VM’s context before relinquishing a physical CPU.

- Restores the VM’s context before putting the guest back into execution.

- Looks after any fault handling.

- Performs any maintenance activities required to ensure the integrity of the VM.

Inside a qvm process, multiple vCPU threads are instantiated for the application thread abstraction, while the list of pass-through devices, the virtual devices, and the stage-2 page table are used for device virtualization and memory virtualization, which are introduced later.

For the vCPU abstraction, the QNX Hypervisor follows a priority-based vCPU sharing model, where the priorities consist of the qvm process priority and vCPU thread priority. In the hypervisor host domain, the relative priorities of qvm processes and the priorities of vCPU scheduling threads inside the qvm process determine which vCPU gets access to the physical CPU.

However, the hypervisor host has no knowledge of what is running in its VMs, or how guests schedule their own internal software. The hypervisor host only ensures that a higher priority guest OS will always preempt a lower priority guest OS when sharing a physical CPU based on the priority and scheduling policy of vCPUs.
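A minimal sketch of such a priority-based selection is shown below. It assumes that a larger number means a higher priority and that the qvm process priority is compared before the vCPU thread priority; both are assumptions of this illustration, and the class and field names are hypothetical rather than QNX APIs.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VCpuThread:
    vm: str
    name: str
    qvm_priority: int      # priority of the owning qvm process (assumed: larger wins)
    thread_priority: int   # priority of the vCPU thread inside that process
    ready: bool = True


def pick_next(threads):
    """Pick the ready vCPU thread that should get the physical CPU next."""
    ready = [t for t in threads if t.ready]
    if not ready:
        return None
    # The scheduler looks only at priorities, never at what the guest is running.
    return max(ready, key=lambda t: (t.qvm_priority, t.thread_priority))
```

The selection never inspects guest state, which mirrors the point that the hypervisor host treats each VM as a black box and only enforces priority order between vCPUs.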

2.3 ARM VM and vCPU Support

The trap mechanism in the ARM architecture is implemented through exception handling. As described above, the applications or user spaces of the guest OS run at EL0, the VMs run at EL1, and the hypervisor runs at EL2. When a sensitive instruction beyond the privilege of a VM at EL1 causes an exception, a trap to the hypervisor at EL2 is taken. The hypervisor handles the exception and then returns to the VM at EL1 through a context switch.

Here is an example of trapping WFI on a CPU. Executing a Wait For Interrupt (WFI) instruction usually puts the CPU into a low-power state. When the TWI bit is set (HCR_EL2.TWI==1), executing WFI at EL0 or EL1 instead causes an exception to EL2. In this example, the VM would usually execute WFI as part of an idle loop, and the hypervisor can trap this operation and schedule a different vCPU instead.

Figure 4. Trap Mechanism and Example of Trapping WFIs from EL1 to EL2
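The WFI decision can be written as a small model. TWI is bit 13 of HCR_EL2; everything else here (the function names, the string results, the run queue) is a hypothetical simplification, and the model ignores other conditions such as the EL1 control that can trap an EL0 WFI first.

```python
from collections import deque

HCR_EL2_TWI = 1 << 13  # TWI is bit 13 of HCR_EL2


def wfi_target(hcr_el2, current_el):
    """Where does a WFI executed at current_el end up in this simplified model?"""
    if current_el in (0, 1) and hcr_el2 & HCR_EL2_TWI:
        return "trap_to_EL2"
    return "low_power_wait"


def on_guest_wfi(hcr_el2, run_queue, current):
    """Model of the hypervisor reaction: switch to another vCPU if WFI trapped."""
    if wfi_target(hcr_el2, 1) == "trap_to_EL2" and run_queue:
        run_queue.append(current)      # the idle vCPU goes to the back of the queue
        return run_queue.popleft()     # another vCPU gets the physical CPU
    return current                     # no trap: the CPU simply waits for an interrupt
```

For example, with the bit set and `deque(["vcpu1"])` waiting, `on_guest_wfi(HCR_EL2_TWI, queue, "vcpu0")` hands the CPU to `vcpu1` rather than letting the core idle.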

In the ARM architecture, a vCPU refers to a virtual processing element, and every vCPU corresponds to a vCPU thread instance. A virtual machine corresponds to a qvm process instance, which contains one or more vCPUs.

Figure 5. ARM Virtual Machine and Virtual CPUs

3. Memory Virtualization

3.1 Memory Virtualization overview

Together with CPU virtualization, memory virtualization ensures that all VMs have independent memory spaces that are strictly isolated according to privilege. The key requirement is therefore address management: controlling access to physical memory by the various system masters depending on context.

Memory virtualization is typically achieved by combining the memory allocation and release performed by the hypervisor and the VMs on the software side with the 2-stage address translation mechanism on the hardware side. The allocation and release mechanisms of the hypervisor and the VMs are both similar to those inside a typical operating system and use page-based memory management.

3.2 ARM 2-Stage Address Translation

The ARM architecture uses a 2-stage address translation mechanism. Typically, stage-1 translation converts a virtual address (VA) to an intermediate physical address (IPA) and is usually managed by the operating system. Stage-2 translation converts the intermediate physical address (IPA) to a physical address (PA) and is usually managed by the hypervisor. The two stages are independent of each other, as the following figure shows: contiguous virtual addresses can be mapped to scattered intermediate physical pages, and scattered intermediate physical addresses can be mapped to contiguous physical addresses.

Figure 6. 2-stage Address Translation Mechanism
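The two stages can be illustrated with two independent lookup tables. This is a simplified sketch that assumes single-level tables keyed by page number and a 4KB page size; the table contents and function names are made up for the example.

```python
PAGE_SIZE = 4096  # assumed 4KB pages


def translate(table, addr):
    """Translate one address through a page-number -> page-number table."""
    page, offset = divmod(addr, PAGE_SIZE)
    if page not in table:
        raise LookupError(f"translation fault at {addr:#x}")
    return table[page] * PAGE_SIZE + offset


def va_to_pa(stage1, stage2, va):
    ipa = translate(stage1, va)    # stage 1: owned by the guest OS
    return translate(stage2, ipa)  # stage 2: owned by the hypervisor


# Contiguous VA pages map to scattered IPA pages ...
stage1 = {0x10: 0x80, 0x11: 0x95}
# ... and the scattered IPA pages map to contiguous PA pages.
stage2 = {0x80: 0x200, 0x95: 0x201}
```

With these tables, `va_to_pa(stage1, stage2, 0x10123)` goes through IPA `0x80123` and yields PA `0x200123`. Because the guest only edits `stage1`, it cannot reach a physical page the hypervisor has not placed in `stage2`.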

The following diagram shows the typical 2-stage address spaces of the ARMv8 architecture. The three virtual address spaces are the guest OS virtual memory map at Non-secure EL0/EL1, the hypervisor virtual memory map at Non-secure EL2, and the secure monitor virtual memory map at EL3. Each of these virtual address spaces is independent and has its own settings and tables. The 2-stage address translation is typically used in virtualization: the Stage1 guest OS tables translate virtual addresses to intermediate physical addresses, covering peripherals, memory, and storage, and the Stage2 virtualization tables translate the intermediate physical addresses to physical addresses.

Figure 7. ARMv8 Typical 2-stage Address Space

In the AArch64 architecture, the MMU typically uses 4-level page tables with a 4KB page size for 48-bit addresses (a 64KB page size is also supported and needs fewer levels) to support the address translation introduced above. The detailed procedure of address translation is not covered further in this paper.

Figure 8. AArch64 4-level Page Table & 1-stage Address Translation Example
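As a small worked example of the 4-level scheme, the sketch below splits a 48-bit virtual address for the 4KB page size into four 9-bit table indices and a 12-bit page offset. The function name is hypothetical; the bit positions follow the 4KB granule layout.

```python
def split_va_4k(va):
    """Split a 48-bit VA into (level 0..3 table indices, page offset) for 4KB pages."""
    if not 0 <= va < (1 << 48):
        raise ValueError("expected a 48-bit virtual address")
    offset = va & 0xFFF                      # bits [11:0]: offset inside the 4KB page
    # Each level indexes a 512-entry table: bits [47:39], [38:30], [29:21], [20:12].
    indices = [(va >> shift) & 0x1FF for shift in (39, 30, 21, 12)]
    return indices, offset
```

Each index selects one of 512 entries, so every table fits exactly in one 4KB page of 8-byte descriptors, which is what keeps the tables compact.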

4. Device Virtualization

4.1 Device Virtualization overview

In virtualization, devices can be summarized as the memory-accessing components in the system other than the processors running the hypervisor and the VMs. The typical types of devices in automotive chips are:

- Emulated virtualized device: emulates an actual physical device for the VMs, which all interact with this device through hypervisor traps.

- Para-virtualized device: emulates an actual physical device for all VMs, and each VM can interact with this device using batched calls through a single trap.

- Pass-through device: exclusively available to one specific VM in the virtualized environment.

- Shared device: can be used by one VM, or by one or more VMs and the hypervisor itself.

The following chapters introduce the detailed implementation of device virtualization in the QNX Hypervisor and the support provided by the ARM architecture.

4.2 QNX Device Virtualization Support

The devices in the QNX Hypervisor can be grouped into physical devices, including pass-through devices and shared devices, and virtual devices, including fully virtualized (emulated) and para-virtualized devices. The physical devices and virtual devices (vdevs) are assigned to the qvm process and the VM when a qvm process is instantiated for VM configuration.

Figure 9. Device Virtualization Supported in QNX Hypervisor

For pass-through devices, the VM has direct and exclusive access, and the hypervisor host is bypassed, so the drivers for these devices are owned directly by the VM. With a pass-through device, the hypervisor host domain only knows that it must route interrupts from the physical device directly to the VM and pass all signals from the VM directly to the device. All interactions are between the VM and the device. The hypervisor needs to identify and let through the interrupts from the device and the signals from the VM. Typical examples of pass-through devices are PCIe and Ethernet.

Shared devices can be used by more than one VM; a typical example is shared memory. The QNX Hypervisor supports two types of device sharing, referred sharing and mediated sharing:

- Referred sharing: the shared device is assigned as pass-through device to one specific VM, which also manages the driver of this device, and other authorized VMs will access this device through the virtual device.

- Mediated sharing: all the VMs access this device through virtual device and the hypervisor host has the control of this device and manages the driver.

For virtual devices, the QNX Hypervisor supports both emulation vdevs and para-virtualized vdevs, which isolate direct communication between the physical devices on the system:

- An emulation vdev is a virtual device that emulates an actual physical device for a VM. To use the vdev, a VM does not need to know that it is running in a virtualized environment. Depending on the type of physical device, the emulation vdev may either handle everything itself, or it may act as an intermediary between the VM and an actual physical device. Typical examples of emulation vdevs are the GIC and timers.

- Compared with emulation vdevs, para-virtualized devices improve efficiency by processing a batch of operations in a single exception trap, whereas an emulation vdev causes an exception trap for every single operation. The QNX Hypervisor supports the VirtIO 1.0 para-virtualization standard, which covers most para-virtualized devices, including block devices, I/O devices, consoles, GPU, display, and ISP.
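The difference in trap counts can be illustrated with a toy model. The `SharedQueue` class below is only loosely inspired by a VirtIO-style queue and is not the VirtIO data layout or a QNX interface; all names are hypothetical.

```python
class SharedQueue:
    """Toy para-virtualized queue: requests are batched in shared memory."""

    def __init__(self):
        self.pending = []     # requests written by the guest, not yet seen by the host
        self.completed = []   # requests the hypervisor side has processed
        self.traps = 0        # number of guest exits caused by this device

    def add(self, request):
        # Guest side: an ordinary memory write, no trap.
        self.pending.append(request)

    def kick(self):
        # Guest side: a single notification traps to the hypervisor,
        # which then processes the whole batch.
        self.traps += 1
        self.completed.extend(self.pending)
        self.pending.clear()


def emulated_device_traps(requests):
    """Emulation vdev model: every single device access causes its own trap."""
    return sum(1 for _ in requests)
```

Queuing eight requests and calling `kick()` once costs one trap in this model, while the emulated path costs eight, which is the efficiency argument made above.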

4.3 ARM Device Virtualization Support

The main device virtualization mechanisms in the ARM architecture are the System Memory Management Unit (SMMU) and the Generic Interrupt Controller (GIC) with virtualization extensions.

For masters other than processors in the system, especially DMA controllers or masters containing DMA blocks, the SMMU ensures address translation and OS-level memory protection when the master is allocated by a guest OS in a virtualization scenario. The left diagram below shows the DMA controller from the operating system’s view without virtualization support. The DMA controller is programmed via a driver in the kernel space of the host OS, which configures the DMA with physical addresses based on the MMU. In a virtualized environment, however, the pass-through DMA for a specific VM can only be configured with intermediate physical addresses, because the VM relies on its own stage-1 address translation. The right diagram below therefore shows the SMMU used for virtualization support of this DMA controller, which can then be configured by the VM with IPAs. All memory accesses are translated from IPA to PA by the SMMU, which is programmed by the hypervisor host, so that the VM and the DMA have the same view of memory.

Figure 10. SMMU for Virtualization Support in ARM Architecture
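A minimal model of this arrangement is sketched below: the hypervisor installs a stage-2 table per device stream, the guest programs the DMA with IPAs, and every DMA access is translated (or rejected) on the way to memory. The class, method names, and table contents are hypothetical and greatly simplified compared with a real SMMU.

```python
PAGE = 4096  # assumed 4KB pages


class SmmuModel:
    def __init__(self):
        self.stage2 = {}  # stream id -> {IPA page: PA page}, written by the hypervisor

    def attach(self, stream_id, table):
        """Hypervisor side: give a device stream the stage-2 view of its VM."""
        self.stage2[stream_id] = dict(table)

    def translate(self, stream_id, ipa):
        """Device side: translate an IPA issued by the DMA into a PA."""
        page, offset = divmod(ipa, PAGE)
        table = self.stage2.get(stream_id, {})
        if page not in table:
            # The device touched memory outside its VM's mapping.
            raise PermissionError(f"SMMU fault: stream {stream_id}, IPA {ipa:#x}")
        return table[page] * PAGE + offset


def dma_copy(smmu, stream_id, src_ipa, dst_ipa):
    """The guest driver passes IPAs; the physical addresses never reach the guest."""
    return smmu.translate(stream_id, src_ipa), smmu.translate(stream_id, dst_ipa)
```

The guest driver in this sketch stays unchanged compared with a non-virtualized system in the sense that it programs addresses from its own view of memory, while isolation is enforced by a table only the hypervisor can modify.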

From Arm GICv2, the GIC can signal both physical and virtual interrupts, by providing a physical CPU interface and a virtual CPU interface, as shown in the following diagram. These two interfaces are identical, except that one signals physical interrupts and the other one signals virtual interrupts. The hypervisor can map the virtual CPU interface into a VM, allowing software in that VM to communicate directly with the GIC. The advantage of this approach is that the hypervisor only needs to set up the virtual interface and does not need to emulate it. This approach reduces the number of times that the execution needs to be trapped to EL2, and therefore reduces the overhead of virtualizing interrupts.

Figure 11. GIC Virtualization Support in ARM Architecture

5. Conclusion

Virtualization is rapidly becoming a critical technology in the software architecture of modern vehicles, especially given the challenges of safety isolation and of balancing flexibility and utilization in cockpit SoCs. The cooperation of hypervisor software with hardware virtualization extensions can make the virtualization features in the cockpit scenario more efficient and safer.


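About 复睿微电子:

复睿微电子 is an advanced technology company funded and established by Fosun Group, a Global 500 enterprise. Rooted in an innovation-driven culture, 复睿微电子 aims to change the way people live, work, learn, and play through technological innovation. The company was founded in January 2022 with the goal of becoming a world-leading provider of high-performance computing solutions for the era of intelligent mobility, and it is committed to providing solutions built on high-performance chips for automotive electronics, artificial intelligence, general-purpose computing, and related fields. It currently focuses on the development of chips for automotive intelligent cockpits and ADS/ADAS, using leading chip design capabilities and artificial intelligence algorithms to empower the industry at the underlying technology level, drive innovation in the automotive industry, and improve people's travel experience. In the era of intelligent mobility, the chip is the brain of the car. Fosun's intelligent mobility group has built a complete intelligent mobility ecosystem, and 复睿微 is the foundation platform for general-purpose and artificial intelligence high-performance computing across that ecosystem. With the mission of improving customer experience, 复睿微 continues to raise computing power in the post-Moore's-law era through advanced packaging, advanced process nodes, and solutions, and faces the new era of automotive intelligence together with its partners.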